Is the "GPU Era" Ending? My Thoughts on the Shift to Inference

I recently watched a video by Theo Browne (t3dotgg) that explored the controversial idea of whether we’re approaching the "end of the GPU era."

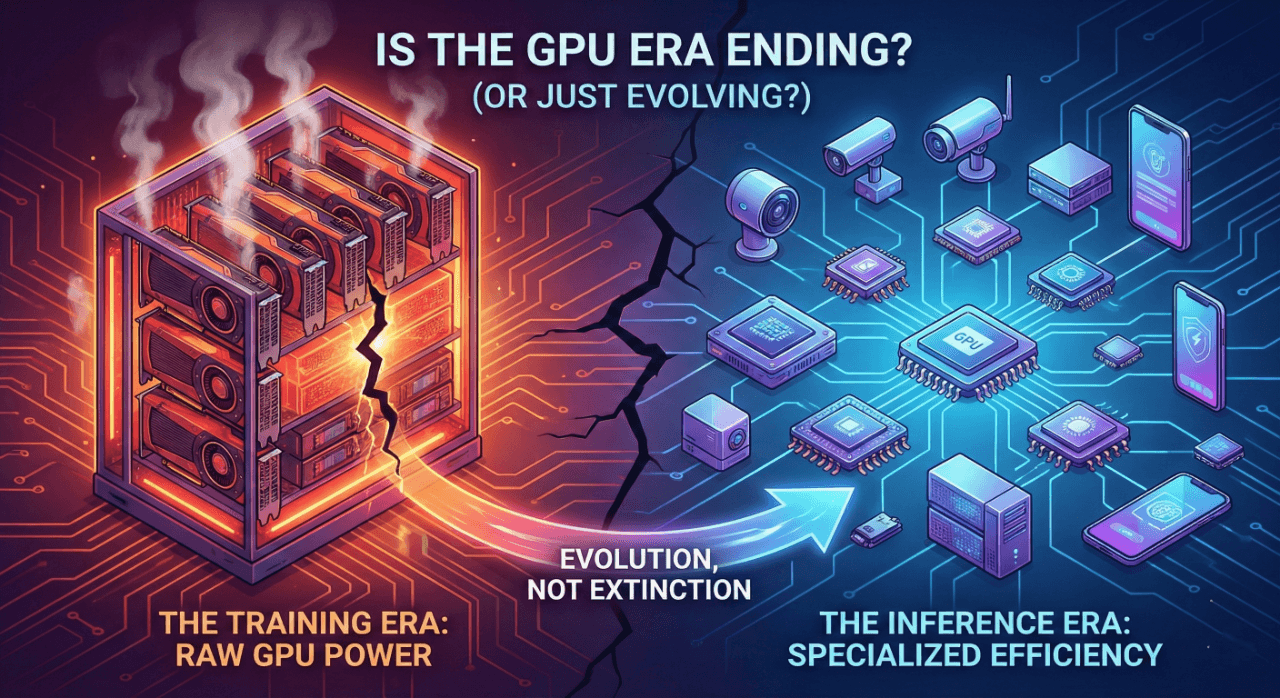

The title is bold, but the discussion highlights a critical shift in where computing is heading. It’s not that GPUs are disappearing; it’s that their role is evolving as AI moves from experimentation to production.

Training vs. Inference

One key takeaway is the massive difference between training models and running them.

Training is compute-heavy and requires raw GPU power.

Inference (running the model for users) happens at a massive scale, where efficiency and power consumption matter more than raw speed.

Because of this, we are seeing a move toward specialized hardware (like LPUs or dedicated accelerators) that complements GPUs rather than replacing them.
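To make the efficiency point a bit more concrete, here is a rough Python sketch of how I think about it. It compares two hypothetical chips by raw throughput and by tokens per joule. The `Accelerator` class, both helper functions, and every number here are made up for illustration; they are placeholders, not benchmarks of any real hardware.

```python
from dataclasses import dataclass


@dataclass
class Accelerator:
    """A hypothetical chip described by two illustrative figures."""
    name: str
    tokens_per_second: float  # sustained inference throughput (placeholder value)
    watts: float              # average power draw under load (placeholder value)

    def tokens_per_joule(self) -> float:
        # 1 watt is 1 joule per second, so throughput / power gives tokens per joule.
        return self.tokens_per_second / self.watts


def fastest(candidates):
    """Pick the chip with the highest raw throughput."""
    return max(candidates, key=lambda a: a.tokens_per_second)


def most_efficient(candidates):
    """Pick the chip that produces the most tokens per unit of energy."""
    return max(candidates, key=lambda a: a.tokens_per_joule())


# Placeholder numbers chosen only to show that the two rankings can disagree.
general_gpu = Accelerator("general-purpose GPU", tokens_per_second=1000.0, watts=500.0)
inference_chip = Accelerator("inference accelerator", tokens_per_second=800.0, watts=200.0)
chips = [general_gpu, inference_chip]

if __name__ == "__main__":
    print("Fastest:", fastest(chips).name)
    print("Most efficient:", most_efficient(chips).name)
```

With these invented figures the GPU wins on raw speed while the accelerator wins on energy per token, which is the kind of trade-off that starts to matter once a model is serving requests around the clock.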

What this means for developers

As a developer, I find this shift towards heterogeneous computing exciting. It pushes us to think more about system design and efficiency. We can't just throw raw compute at every problem anymore; we have to choose the right architecture for the specific task.

The GPU era isn’t ending. It’s just growing up.
